In a previous blog post [3], I discussed how Isilon enables you to create a Data Lake that can serve multiple Hadoop compute clusters with different Hadoop versions and distributions simultaneously. I stated that many workloads run faster on Isilon than on traditional Hadoop clusters that use DAS storage. IDC [2] recently confirmed this by running various Hadoop benchmarks against Isilon and against a native Hadoop cluster on DAS. Although I will show their results right at the beginning, the main purpose of this post is to discuss why Isilon is capable of delivering such good results and how the two architectures differ with regard to data distribution and balancing within the cluster.

The test environment

- Cloudera Hadoop Distribution CDH5.

- The Hadoop DAS cluster contained seven nodes: one master and six worker nodes, each with eight 10k RPM 300 GB disks.

- The Isilon cluster was built from four X410 nodes, each with 57 TB of disk capacity, 3.2 TB of SSD, and 10 GbE connections.

- For more details see the IDC validation report [2].

NFS access

First, IDC tested NFS read and write access. Unsurprisingly, Isilon provides MUCH more throughput, even with just four nodes.

Figure 1: Runtimes for NFS write and read copy jobs when copying a 10 GB file (the block size is not stated, but I would assume 1 MB or larger)

NFS writes turned out to be 4.2 times faster, which is important if you want to ingest data via NFS. Reads were almost 37 times faster.

Hadoop workloads

Three Hadoop workload types were run and compared using standard Hadoop benchmarks:

- Sequential write using TeraGen

- Mixed read/write using TeraSort

- Sequential read using TeraValidate

Figure 2: Runtimes for three different workloads using TeraGen, TeraSort and TeraValidate

The runtimes for the write workload turned out to be about 2.6 times shorter on Isilon, and about 1.5 times shorter for the other two workload types. The corresponding throughputs are shown in the following table.

| Job | Compute + Isilon | Hadoop DAS Cluster |
|---|---|---|
| TeraGen | 1681 MB/s | 605 MB/s |
| TeraSort | 642 MB/s | 416 MB/s |
| TeraValidate | 2832 MB/s | 1828 MB/s |
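Assuming each job processed the same amount of data on both platforms, the speedup can be read straight off the throughput table as a simple ratio. A small Python check using the figures from the table:

```python
# Throughput in MB/s (Isilon, DAS), copied from the IDC results table.
results = {
    "TeraGen": (1681, 605),
    "TeraSort": (642, 416),
    "TeraValidate": (2832, 1828),
}


def speedups(table):
    """Return the Isilon/DAS throughput ratio per job, rounded to two decimals."""
    return {job: round(isilon / das, 2) for job, (isilon, das) in table.items()}


for job, ratio in speedups(results).items():
    print(f"{job:<13} {ratio:.2f}x")
```

By throughput, TeraGen comes out at roughly 2.8x, a bit above the runtime-based 2.6x quoted above; the two metrics need not match exactly if the measured runtimes include fixed job overhead. TeraSort and TeraValidate both land at about 1.5x.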

The results speak for themselves, but let’s look at the techniques OneFS, Isilon's operating system, uses to achieve this performance advantage over a DAS cluster.

The anatomy of file reads on Isilon

Although I/O on a DAS cluster is distributed across all nodes, each individual 64 MB block is served by a single node. Isilon distributes the load at a much finer granularity. A read on Isilon proceeds as follows:

- The compute node sends an HDFS metadata request to the Name Node service, which runs on all Isilon nodes (no single point of failure).

- The Name Node service returns the IP addresses and block numbers of any three Isilon nodes in the same rack as the compute node. This provides effective rack locality.

- The compute node sends an HDFS read request for the 64 MB block to the Data Node service on the first Isilon node returned.

- The contacted Isilon node retrieves, over the internal InfiniBand network, all 128 KB Isilon blocks that make up the 64 MB HDFS block (512 of them). Blocks that are not already in the L2 cache are read from disk. As mentioned above, this is fundamentally different from a DAS cluster, where the whole 64 MB block is read from a single node. As a result, I/O on Isilon is served by many more disks and CPUs than on a DAS cluster.

- The contacted Isilon node will return the entire HDFS block to the calling compute node.
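The read path above can be sketched as a toy Python model. Everything in it is an assumption for illustration: the node names, the rack map, and the round-robin placement of 128 KB units are hypothetical (the real OneFS on-disk layout depends on the protection level), and no real OneFS or HDFS API is called. It only shows the key point: a single 64 MB HDFS block is assembled from units held by every node rather than read from one node's disks.

```python
from collections import Counter
from itertools import cycle

HDFS_BLOCK = 64 * 1024 * 1024  # 64 MB HDFS block
STRIPE_UNIT = 128 * 1024       # 128 KB Isilon block

# Hypothetical cluster map: node name -> rack (not a real OneFS API).
NODES = {
    "isilon-1": "rack-a",
    "isilon-2": "rack-a",
    "isilon-3": "rack-a",
    "isilon-4": "rack-b",
}


def name_node_lookup(client_rack, count=3):
    """Return up to `count` nodes in the client's rack (rack locality)."""
    return [n for n, rack in NODES.items() if rack == client_rack][:count]


def stripe_layout(block_size=HDFS_BLOCK, unit=STRIPE_UNIT):
    """Assumed placement: 128 KB units spread round-robin over all nodes."""
    owners = cycle(sorted(NODES))
    return [next(owners) for _ in range(block_size // unit)]


def read_block(client_rack):
    """The first returned node gathers every unit of the block from its owner
    and would then return the whole block to the client. Returns the contacted
    node and how many units each node contributes."""
    contacted = name_node_lookup(client_rack)[0]
    units_per_node = Counter(stripe_layout())
    return contacted, units_per_node


contacted, units_per_node = read_block("rack-a")
print(contacted, dict(units_per_node))
```

With four nodes, each contributes 128 of the 512 units, whereas on a DAS cluster a single DataNode would serve all 512.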

The anatomy of file writes on Isilon

When a client writes a file to the cluster, the node the client is connected to receives and processes the file:

- That node creates a write plan for the file, including calculating the forward error correction (FEC) data. This is much more space-efficient than on a DAS cluster, where we typically keep three copies of each block for data protection.

- Data blocks assigned to that node are written to its NVRAM. The NVRAM cards are specific to Isilon and are not available on DAS clusters.

- Data blocks assigned to other nodes travel over the InfiniBand network to their L2 cache, and then to their NVRAM.

- Once all nodes hold all the data and FEC blocks in NVRAM, a commit is returned to the client. This means we do not need to wait until the data is written to disk, as all I/O is securely buffered in NVRAM on Isilon.

- Data block(s) assigned to this node stay cached in L2 for future reads of that file.

- Data is then written onto the spindles.
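The write steps above can be illustrated with a toy model. Two simplifications are assumptions rather than OneFS internals: FEC is reduced to a single XOR parity unit (OneFS supports stronger protection levels), and each hypothetical node receives exactly one unit. The model shows the ordering that matters: the client gets its commit once every node has staged its unit in NVRAM, before anything is flushed to disk.

```python
from functools import reduce


def xor_parity(units):
    """Byte-wise XOR of equally sized units (a stand-in for real FEC)."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*units))


class Node:
    """Hypothetical storage node with an NVRAM staging area and a disk."""

    def __init__(self, name):
        self.name = name
        self.nvram = []  # staged units (battery-backed on real hardware)
        self.disk = []   # units written to spindles

    def stage(self, unit):
        self.nvram.append(unit)
        return True  # acknowledge: the unit is now safe in NVRAM

    def flush(self):
        self.disk.extend(self.nvram)
        self.nvram.clear()


def write_file(data, nodes, unit_size):
    """Toy write plan: k = len(nodes) - 1 data units plus one parity unit."""
    k = len(nodes) - 1
    if len(data) > k * unit_size:
        raise ValueError("toy model: data must fit in a single stripe")
    padded = data.ljust(k * unit_size, b"\0")
    units = [padded[i * unit_size:(i + 1) * unit_size] for i in range(k)]
    units.append(xor_parity(units))
    acks = [node.stage(unit) for node, unit in zip(nodes, units)]
    # The commit goes back to the client as soon as every node has staged its
    # unit in NVRAM; flushing to disk happens later, outside the client's wait.
    return all(acks)


def raw_per_usable(data_units, redundant_units):
    """Raw capacity consumed per unit of usable capacity."""
    return (data_units + redundant_units) / data_units


nodes = [Node(f"node-{i}") for i in range(4)]
committed = write_file(b"hello hadoop", nodes, unit_size=4)

# Any single lost unit can be rebuilt by XOR-ing the surviving units.
lost = nodes[1].nvram[0]
survivors = [n.nvram[0] for n in nodes if n is not nodes[1]]
assert xor_parity(survivors) == lost

for node in nodes:  # background flush after the commit
    node.flush()

# 3 data + 1 parity vs. triple replication (1 copy + 2 extra copies).
print(committed, raw_per_usable(3, 1), raw_per_usable(1, 2))
```

With three data units and one parity unit, the raw-to-usable ratio is 4/3, about 1.33, compared with 3.0 for triple replication, which is the space-efficiency point made in the first step.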

The myth of disk locality importance for Hadoop

We sometimes hear objections from admins who claim that disk locality is critical for Hadoop. But remember that traditional Hadoop was designed for slow star networks, which typically operated at 1 Gb/s. The only effective way to deal with such slow networks was to keep as much I/O as possible local to the server (disk locality). Several facts make disk locality irrelevant today:

I. Fast networks are standard today.

- Today, a single non-blocking 10 Gbps switch port (up to 2500 MB/sec full duplex) can provide more bandwidth than a typical disk subsystem with 12 disks (360 – 1200 MB/sec).

- We are no longer constrained to maintain data locality in order to provide adequate I/O bandwidth.

- Isilon provides rack-locality, not disk-locality. This reduces the Ethernet traffic between racks.

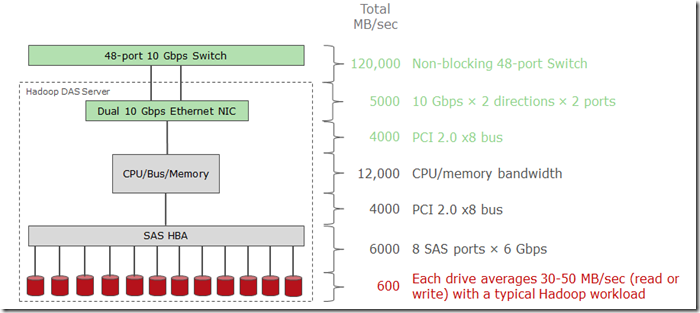

Figure 3: I/O path in a DAS architecture. With a non-blocking 10 Gbps network, the network is clearly not the bottleneck. Even if we doubled the number of disks in the system, the disks would remain the bottleneck. As a result, disk locality is irrelevant for most workloads.
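The bandwidth comparison in section I is simple arithmetic. The sketch below assumes 1 MB = 10^6 bytes and a per-disk throughput of 30 to 100 MB/s, which is what the 360 to 1200 MB/s range for 12 disks implies; the helper names are hypothetical.

```python
def port_mb_s(gbit_per_s):
    """Link speed in Gbit/s -> MB/s in one direction (1 MB = 10**6 bytes)."""
    return gbit_per_s * 1000 / 8


def disk_range_mb_s(disks, per_disk_low=30, per_disk_high=100):
    """Aggregate disk throughput range; the per-disk rates are assumptions."""
    return disks * per_disk_low, disks * per_disk_high


one_way = port_mb_s(10)    # 1250.0 MB/s per direction
full_duplex = 2 * one_way  # 2500.0 MB/s
for disks in (12, 24):
    low, high = disk_range_mb_s(disks)
    print(f"{disks} disks: {low}-{high} MB/s vs port {one_way:.0f} "
          f"one-way / {full_duplex:.0f} full duplex")
```

Even a single direction of the port (1250 MB/s) exceeds the 12-disk upper bound. Doubling to 24 disks gives 720 to 2400 MB/s, which stays below the 2500 MB/s full-duplex figure, although a one-directional stream at the very top of that range would exceed 1250 MB/s.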

II. Disk locality is lost in several common situations:

- All nodes in a DAS cluster that hold a replica of the block are already running their maximum number of tasks. This is very common on busy clusters!

- Input files are compressed with a non-splittable codec such as gzip.

- “Analysis of Hadoop jobs from Facebook [1] underscores the difficulty in attaining disk-locality: overall, only 34% of tasks run on the same node that has the input data.”

- Disk locality provides very low-latency I/O; however, this latency has very little effect on batch operations such as MapReduce.
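Why busy clusters lose locality can be illustrated with a deliberately simple probability model (an assumption for illustration, not taken from [1]): if every node is independently busy with probability p and a block has r replicas, a task can only run node-local when at least one of the r replica holders has a free slot, which happens with probability 1 - p^r.

```python
def p_local_slot(busy_fraction: float, replicas: int) -> float:
    """Probability that at least one of `replicas` nodes has a free slot,
    assuming each node is busy independently with probability busy_fraction."""
    if not 0.0 <= busy_fraction <= 1.0:
        raise ValueError("busy_fraction must be in [0, 1]")
    return 1.0 - busy_fraction ** replicas


for busy in (0.5, 0.9):
    for replicas in (3, 5):
        print(f"busy={busy:.0%} replicas={replicas}: "
              f"{p_local_slot(busy, replicas):.1%} chance of a local slot")
```

In this model, with 90% of nodes busy and the default HDFS replication factor of 3, only about 27% of tasks find a local slot; raising replication to 5 lifts that to only about 41%. That is why traditional clusters reach for higher replication counts on hot files (section III).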

III. Data replication for performance

- On very busy traditional clusters, hot files that are read by many concurrent tasks may need a high replication count to preserve data locality and to support highly concurrent reads.

- On Isilon, a high replication count is not required because:

a) Data locality is not required and

b) Reads are split evenly over many Isilon nodes with a globally coherent cache, providing very high concurrent read performance

Other Isilon performance relevant technologies

OneFS is very mature: it has been developed for more than a decade for high-throughput, low-latency, multi-protocol access. You can find a number of articles and papers describing the relevant features (for example [4]); I’ll just give some keywords here:

- All writes are buffered by redundant NVRAM, which makes writes extremely fast.

- OneFS provides an L1 cache, a globally coherent L2 cache, and an L3 cache on SSDs for accelerated reads.

- Access patterns can be configured per cluster, per pool, or even at the directory level to optimize and balance prefetching. The available patterns are random, concurrent, and streaming.

- Metadata acceleration is provided by the L3 cache; alternatively, OneFS can be configured to store all file system metadata on SSDs.

Summary

Isilon is a scale-out NAS system with a distributed file system built for massive throughput requirements and workloads such as Hadoop. HDFS is implemented as a protocol, and the Name Node and Data Node services are delivered in a highly available manner by all Isilon nodes. IDC's performance validation [2] showed up to 2.5 times higher performance compared to a DAS cluster. Thanks to modern networking technologies, the often-cited disk locality is irrelevant for Hadoop on Isilon. Besides better performance, Isilon provides many other advantages, such as much higher capacity efficiency and many enterprise storage features. Furthermore, storage and compute nodes can be scaled independently, and you can access the same data with different Hadoop versions and distributions simultaneously.

References

[1] Disk-Locality in Datacenter Computing Considered Irrelevant, Ganesh Ananthanarayanan, University of California, Berkeley

[2] EMC Isilon Scale-out Data Lake Foundation – Essential Capabilities for Building Big Data Infrastructure, IDC White Paper, October 2014

[3] How to access data with different Hadoop versions and distributions simultaneously, Stefan Radtke, Blog post 2015

[4] EMC Isilon OneFS – A Technical Overview; White Paper, November 2013

The White Papers mentioned here are all available for download at https://support.emc.com


Good read. Stefan, have you ever tried running HDFS on top of an existing NFS? Any performance metrics for that? I'm aware of concepts like the NFS gateway, but was just curious if you've ever tried it.

Hi Ahab, no, I have never tried it. With the technology described here it is never required, because we provide native access via NFS *and* HDFS at the same time, at full speed. There is no need for a gateway layer or the like.


www.technewworld.in

How to Start A blog 2019

Eid AL ADHA

Nice Blog! Really Intresting and had gone through this article, keep blogging..

ReplyDeleteTableau Training In Hyderabad

Hadoop Training In Hyderabad

I liked the way you write article .

ReplyDeleteCheck one of the best training institute in Bangalore

Networking training institute in Bangalore

Really nice post. Provided a helpful information. I hope that you will post more updates like this

ReplyDeleteAWS Online Training

AI Training

Big Data Training

I just got to this amazing site not long ago. I was actually captured with the piece of resources you have got here. Big thumbs up for making such wonderful blog page! How to increase domain authority in 2019

ReplyDeletewww.technewworld.in

This is also a very good post which I really enjoyed reading. It is not every day that I have the possibility to see something like this..

ReplyDeletemachine learning course malaysia

Your blog provided us with valuable information to work with. Each & every tips of your post are awesome. Thanks a lot for sharing. Keep blogging,

ReplyDeleteI am impressed by the information that you have on this blog. It shows how well you understand this subject.

ReplyDelete

ReplyDeleteThanks for sharing NAS storage dubai

ReplyDeleteThanks for sharing NAS storage dubai

THANKS FOR SHARING SUCH A GREAT WORK

ReplyDeleteGOOD CONTENT!!

SAN Solutions in Dubai

Thanks for sharing SAN solutions in dubai

ReplyDeleteĐịa chỉ mua giảo cổ lam Hòa Bình tại thủ đô

ReplyDeleteDấu hiệu bệnh tiểu đường

Triệu chứng bệnh tiểu đường

Tiệm bán hạt methi Hà Nội

Hạt methi Ấn Độ mua ở đâu Hải Dương

hi

ReplyDeleteGood job and thanks for sharing such a good blog You’re doing a great job. Keep it up !!

ReplyDeletePython Training in Chennai | Best Python Training in Chennai | Python with DataScience Training in Chennai | Python Training Courses and fees details at Credo Systemz | Python Training Courses in Velachery & OMR | Python Combo offer | Top Training Institutes in Chennai for Python Courses

For Hadoop Training in Bangalore Visit : HadoopTraining in Bangalore

ReplyDeletePrivacy HIPAA Officer Training will help to understand safeguards for keeping protected health information safe from a people, administrative, and contractual standpoint

ReplyDeletethanks for sharing such an useful and nice stuff...

ReplyDeletedata science tutorial

Thank you for your post. This is excellent information. It is amazing and wonderful to visit your site.Salesforce CRM Training in Bangalore

ReplyDeleteAwesome,Thank you so much for sharing such an awesome blog.Power BI Training in Bangalore

ReplyDeleteThanks for sharing this blog. This very important and informative blog. Puppet Training in Bangalore

ReplyDeleteThank you for sharing such a nice post!

ReplyDeleteUpgrade your career Learn Mulesoft Training in Bangalore from industry experts get Complete hands-on Training, Interview preparation, and Job Assistance at Softgen Infotech.

I am happy for sharing on this blog its awesome blog I really impressed. Thanks for sharing. Great efforts.

ReplyDeleteBest SAP HANA Training in Bangalore

Best SAP HANA Admin Training in Bangalore

Best SAP GRC Training in Bangalore

Best SAP S4 HANA Training in Bangalore

Best SAP S4 HANA Simple Finance Training in Bangalore

Best SAP S4 HANA Simple Logistics Training in Bangalore

Such a great word which you use in your article and article is amazing knowledge. thank you for sharing it.

ReplyDeleteBest SAP Training in Bangalore

Best SAP ABAP Training in Bangalore

Best SAP FICO Training in Bangalore

Best SAP HANA Training in Bangalore

Best SAP MM Training in Bangalore

Best SAP SD Training in Bangalore

Really i appreciate the effort you made to share the knowledge. The topic here i found was really effective...

ReplyDeleteBest SAP HR Training in Bangalore

Best SAP BASIS Training in Bangalore

Best SAP HCM Training in Bangalore

Best SAP S4 HANA Simple Finance Training in Bangalore

Best SAP S4 HANA Simple Logistics Training in Bangalore

Great post!I am actually getting ready to across this information,i am very happy to this commands.Also great blog here with all of the valuable information you have.Well done,its a great knowledge.

ReplyDeletedigital marketing course in chennai

SKARTEC Digital Marketing

best digital marketing training in chennai

seo training in chennai

online digital marketing training

best marketing books

best marketing books for beginners

best marketing books for entrepreneurs

best marketing books in india

digital marketing course fees

best seo service in chennai

SKARTEC SEO Services

digital marketing resources

digital marketing blog

digital marketing expert

how to start affiliate marketing

what is affilite marketing and how does it work

affiliate marketing for beginners

Great Blog Thanks for sharing...such a helpful information keep sharing these type of blogs...

ReplyDeleteDigital Marketing Training in Bangalore

Great information!!! Thanks for your wonderful informative blog...

ReplyDeleteDigital Marketing Courses in Bangalore

Really awesome blog!!! I finally found great post here. Nice article on data science . Thanks for sharing your innovative ideas to our vision. your writing style is simply awesome with useful information. Very informative, Excellent work! I will get back here.

ReplyDeleteData Science Course in Marathahalli

Study Data Scientist Course in Bangalore with ExcelR where you get a great experience and better knowledge.

ReplyDeleteData Scientist Course

Effective blog with a lot of information. I just Shared you the link below for Courses .They really provide good level of training and Placement,I just Had Hadoop Classes in this institute , Just Check This Link You can get it more information about the Hadoop course.

ReplyDeleteJava training in chennai | Java training in annanagar | Java training in omr | Java training in porur | Java training in tambaram | Java training in velachery

Personally I think overjoyed I discovered the blogs.

ReplyDeletedata science course

360DigiTMG

This was really one of my favorite website. ExcelR Machine Learning Course In Pune Please keep on posting.

ReplyDeleteNice post and valuable information thank you.

ReplyDeleteData Analytics Course in Pune

Data Analytics Training in Pune

I am really enjoying reading your well written articles. It looks like you spend a lot of effort and time on your blog. I have bookmarked it and I am looking forward to reading new articles. Keep up the good work.

ReplyDeleteData Science Training

I will really appreciate the writer's choice for choosing this excellent article appropriate to my matter. Here is deep description about the article matter which helped me more.

ReplyDeleteData Science Course in Bangalore

Great post i must say and thanks for the information.

ReplyDeleteData Science Course in Hyderabad

thanks for sharing.great article poster blog.keep posting like this.We are the Best Digital Marketing Agency in Chennai, Coimbatore, Madurai and change makers of digital! For Enquiry Contact us @+91 9791811111

ReplyDeletedigital marketing consultants in chennai | Leading digital marketing agencies in chennai | digital marketing agencies in chennai | Website designers in chennai | social media marketing company in chennai

ReplyDeleteSuch a very useful article. Very interesting to read this article.I would like to thank you for the efforts you had made for writing this awesome article

Data Science Training In Chennai | Certification | Data Science Courses in Chennai | Data Science Training In Bangalore | Certification | Data Science Courses in Bangalore | Data Science Training In Hyderabad | Certification | Data Science Courses in hyderabad | Data Science Training In Coimbatore | Certification | Data Science Courses in Coimbatore | Data Science Training | Certification | Data Science Online Training Course

Excellent Blog! I would like to thank for the efforts you have made in writing this post. I am hoping the same best work from you in the future as well. I wanted to thank you for this websites! Thanks for sharing. Great websites!. Learn best Ethical Hacking Course in Bangalore

ReplyDelete

ReplyDeleteI am reading your post from the beginning, it was so interesting to read & I feel thanks to you for posting such a good blog, keep updates regularly.I want to share about weblogic administration .

ReplyDeleteI am reading your post from the beginning, it was so interesting to read & I feel thanks to you for posting such a good blog, keep updates regularly. I want to share about weblogic training .

Thank you for this comparative review? From this review it helps me to understand more about the issues I am investigating. Hope you will share more reviews or comparison articles or other relevant information: Nguyên nhân bé bị loạn khuẩn đường ruột, Máy xông tinh dầu Hello Kitty 2020, Uống nhiều rượu có thể làm tăng nguy cơ ung thư phổi, Béo phì có dẫn đến tiểu đường không, Những người không nên uống thuốc tránh thai, Hướng dẫn làm đồ ăn cho bé ăn dặm, Máy ép dầu gia đình Trung Quốc - 2020, Máy ép hoa quả Nanifood,...................

ReplyDeletewonderful article. I would like to thank you for the efforts you had made for writing this awesome article. This article resolved my all queries. data science courses

ReplyDeletevery nice article,thank you for sharing this awesome articlw with us.

ReplyDeletekeep updating,...

big data online training

This is a wonderful article, Given so much info in it, These type of articles keeps the users interest in the website, and keep on sharing more ... good luck.

ReplyDeleteSimple Linear Regression

Correlation vs Covariance

bag of words

time series analysis

ReplyDeleteThis was definitely one of my favorite blogs. Every post published did impress me. ExcelR Data Analytics Courses

Amazing post , thanks for sharing with us

ReplyDeleteBest Digital Marketing Training in Bangalore

I read this article fully on the topic of the resemblance of most recent and preceding technologies, it’s remarkable article.

ReplyDeleteRecliner sofa set in Bangalore

I think this is an informative post and it is very useful and knowledgeable. therefore, I would like to thank you for the efforts you have made in writing this article.

ReplyDeleteSEO Cheltenham

SEO Agency Gloucester

SEO Gloucester

Web Design Gloucester

Modern service desk to auto-resolve employee tickets 10x faster

ReplyDeleteAs your business grows, your IT support team has to stay more systematized and effective. In a traditional service desk, employees have to go through various steps to get their queries/requests related to IT, equipment, or other departments. They have to frequently check the notifications of email and portal to receive updates on their support ticket. In such scenarios, employees end up alerting their IT desk support team directly. This takes more time to resolve the issues faced by employees and ultimately affects the company's operations badly.

Implementing a modern service desk allows employees to communicate and collaborate with a chatbot or Virtual Assistant (VA) that prioritizes and auto-resolves issues. Employees across all departments can ask questions and get quick help in real-time with ease, allowing them to concentrate more on their works without any confusion and enhance the agent's productivity—right information to the right people from the right place.

Rezolve.ai, integrated with Microsoft Teams, will help your organization combine streamlined collaboration and contextual ticketing to provide the best user experiences. Rezolve.ai auto-resolve your employee queries and issues faster and augments your ability to reach solutions in seconds.

Create, manage, and auto-resolve tickets 10x faster with Rezolve.ai within Teams.

Click here: ai service desk

Hi,

ReplyDeleteThanks for sharing the amzing informative blog.

Data Science Training in Pune

Hi, Actually I read it yesterday but I had some ideas about it and today I wanted to read it again because it is so well written.

ReplyDeleteIoT Training

ReplyDeleteReally nice and interesting post. I was looking for this kind of information, enjoyed reading this one thank you.

Artificial Intelligence Companies in Bangalore

ReplyDeleteWe came up with a great learning experience of Azure training in Chennai, from Infycle Technologies, the finest software training Institute in Chennai. And we also come up with other technical courses like Cyber Security, Graphic Design and Animation, Big Data Hadoop training in Chennai, Block Security, Java, Cyber Security, Oracle, Python, Big data, Azure, Python, Manual and Automation Testing, DevOps, Medical Coding etc., with great learning experience with outstanding training with experienced trainers and friendly environment. And we also arrange 100+ Live Practical Sessions and Real-Time scenarios which helps you to easily get through the interviews in top MNC’s. for more queries approach us on 7504633633, 7502633633.

This comment has been removed by the author.

ReplyDeleteAll things considered I read it yesterday yet I had a few musings about it and today I needed to peruse it again in light of the fact that it is very elegantly composed.data analytics courses malaysia

ReplyDeleteThank you for sharing your awesome and valuable article this is the best blog for the students they can also learn.

ReplyDeletedevops training in bangalore

devops course in bangalore

aws training in bangalore

Really impressed! Information shared was very helpful Your website is very valuable. Thanks for sharing.

ReplyDeleteFood Product Development Consultant

I think I have never seen such blogs ever before that has complete things with all details which I want. So kindly update this ever for us.

ReplyDeletefull stack developer course

Great Article bcom with cma The Bachelor of Commerce (BCom) with Certified Management Accountant (CMA) is a unique and highly sought-after academic program that blends traditional commerce education with advanced professional certification. This integrated course is designed to equip students with a comprehensive understanding of business, finance, and accounting principles while simultaneously preparing them for the globally recognized CMA certification. By pursuing BCom with CMA, students gain a competitive edge in the job market, as they acquire specialized skills in strategic financial management, cost analysis, and decision-making. This dual-qualification pathway opens doors to lucrative career opportunities in corporate finance, accounting, auditing, and consulting, both in India and abroad.

ReplyDeleteGreat Article about the top BCom colleges in bangalore . student staying or looking to study bcom in Bangalore this blog will best to clarify all the doubts.

ReplyDeleteTop-tier faculty, industry-ready curriculum—this is Bangalore’s best for MCom!

ReplyDeleteTop-tier Hospitality Management Colleges in Bangalore’s Check this!

ReplyDeleteBest MPT Specializations in 2025 read more...

ReplyDeleteStudy Bcom in bangalore top colleges with full details best bcom colleges

ReplyDeleteStudy BE/Btech Artificial Intelligence course in Bangalore from top colleges with full details best BE/Btech AI colleges

ReplyDeleteTop BBA colleges in bangalore get full details Best BBA colleges

ReplyDeleteReally nice and interesting post. I was looking for this kind of information, enjoyed reading this one thank you.

ReplyDeletetop Residential plots agents in hyderabad

Really nice and interesting post. I was looking for this kind of information, enjoyed reading this one thank you.

ReplyDeletebest honeymoon hotels in kochi

Thank u so much sharing information like this it is the best blog

ReplyDeleteChicken Kebabs near Lincoln Ave

NICE INFORMATIVE BLOG

ReplyDeleteKabab near Lincoln Ave

NICE INFORMATIVE BLOG

ReplyDeletebest plots dealers in hyderabad

NICE INFORMATIVE BLOG

ReplyDeleteFamily restaurant in kochi

NICE INFORMATIVE BLOG

ReplyDeleteDTCP layout in J P Dargah

NICE INFORMATIVE BLOG

ReplyDeleteHigh speed internet Hyderabad

NICE INFORMATIVE BLOG

ReplyDeleteinternet connection for home

NICE INFORMATIVE BLOG

ReplyDeleteHome internet Hyderabad

NICE INFORMATIVE BLOG

ReplyDeleteHMDA layout near Shadnagar

NICE INFORMATIVE BLOG

ReplyDeleteinternet connection for home in Hyderabad